Talk to AI like a colleague

From Zero to World-Class AI Manager - part 2

Another week, another study showing that most people still can’t figure out how to use AI to actually do their jobs better.

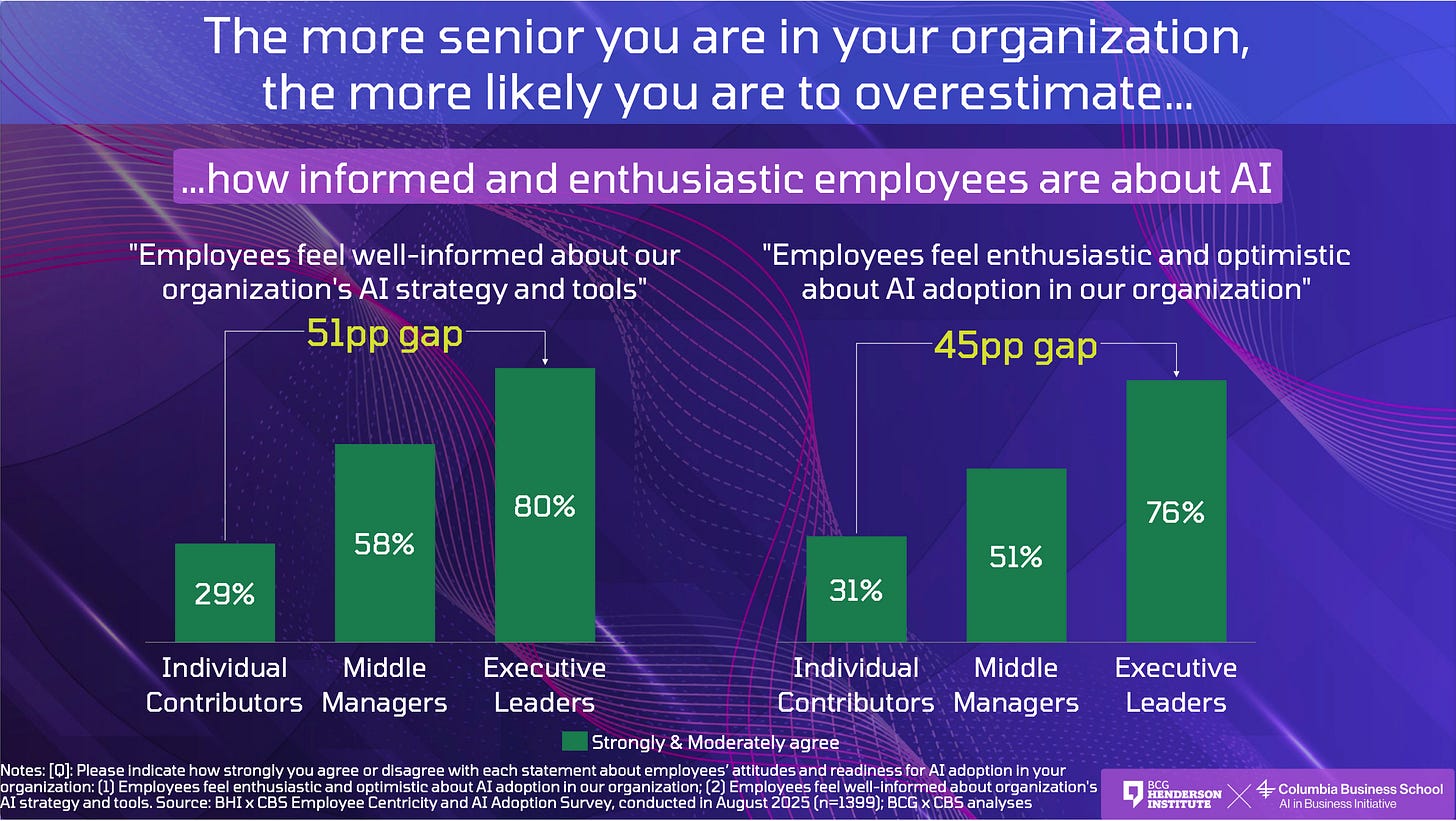

New research from BCG and Columbia Business School found that 80% of executives think their employees understand the company’s AI strategy. Only 29% of employees agree.

Leaders think 76% of employees are excited about AI. Employees say it’s closer to 31%.

Here’s the real problem - when people don’t understand how AI helps them personally, they don’t use it. And when they don’t use it, organisations stay stuck.

This series fixes that by teaching you to use AI on your actual work - one task at a time.

Last week, you mapped your work. This week, you’ll learn the foundational skill that makes everything else possible: how to brief AI properly.

Brief it like a colleague, not a search engine

Most people fail with AI not because the technology isn’t good enough, but because they treat it like a search engine.

You type “How can AI help me with meetings?” and get back a generic list of tips you already know.

The problem wasn’t the AI. The problem was the question.

If you asked a smart colleague “How can I improve meetings?” they’d say “Tell me more - what kind of meetings? What’s not working? What have you tried?”

AI needs the same context.

When you search, you type 2-3 keywords and scan results, which works fine for facts.

But AI can reason, synthesise, and create - if you give it enough to work with.

Most people type lazy prompts:

“How can AI help with client meetings?”

“Write me a strategy deck.”

“Summarise this article.”

These give you generic output because you haven’t given AI any context about your specific situation.

Instead, brief AI like you’d brief a competent colleague. Give context. Explain constraints. Define what good looks like.

The five-part brief

Think carefully about how you’d brief someone on your team.

A teammate would ask clarifying questions, so you'd be specific about what you're asking for up front. You wouldn't just say "Sort my email." You'd tell them who you are and what you're working on, what you're trying to achieve, why the current approach isn't working, what constraints you're dealing with, and what a good result looks like.

That’s exactly how to brief AI:

Role - Who you are and your context

Goal - What you’re trying to achieve

Context - The current situation

Constraints - Deadlines, tools, format, tone

What Good Looks Like - The output you expect

Here’s the template:

I’m a [role] at a [company size/industry].

I’m working on [specific task] with [constraints, timelines, tools].

Current approach: [brief description]. This isn’t ideal because [why].

Help me improve by [specific improvement desired].

Return [structured format or bullet summary].

Then list the top 3 likely errors or missing assumptions I should verify.

That last line is critical. Always ask AI what you should double-check.

Now, if you have time, copy-paste this scaffold into ChatGPT and fill it out for your own work before reading on.

Here’s a concrete example:

“I’m a sales lead at a SaaS firm preparing Q4 pipeline updates. I need to shorten my weekly report from 3 pages to 1 without losing key metrics. Help me summarise the data clearly for execs making resource decisions.”

You’ve given AI your role, the problem, the constraint, and the audience. That’s what gets you useful output instead of generic advice.

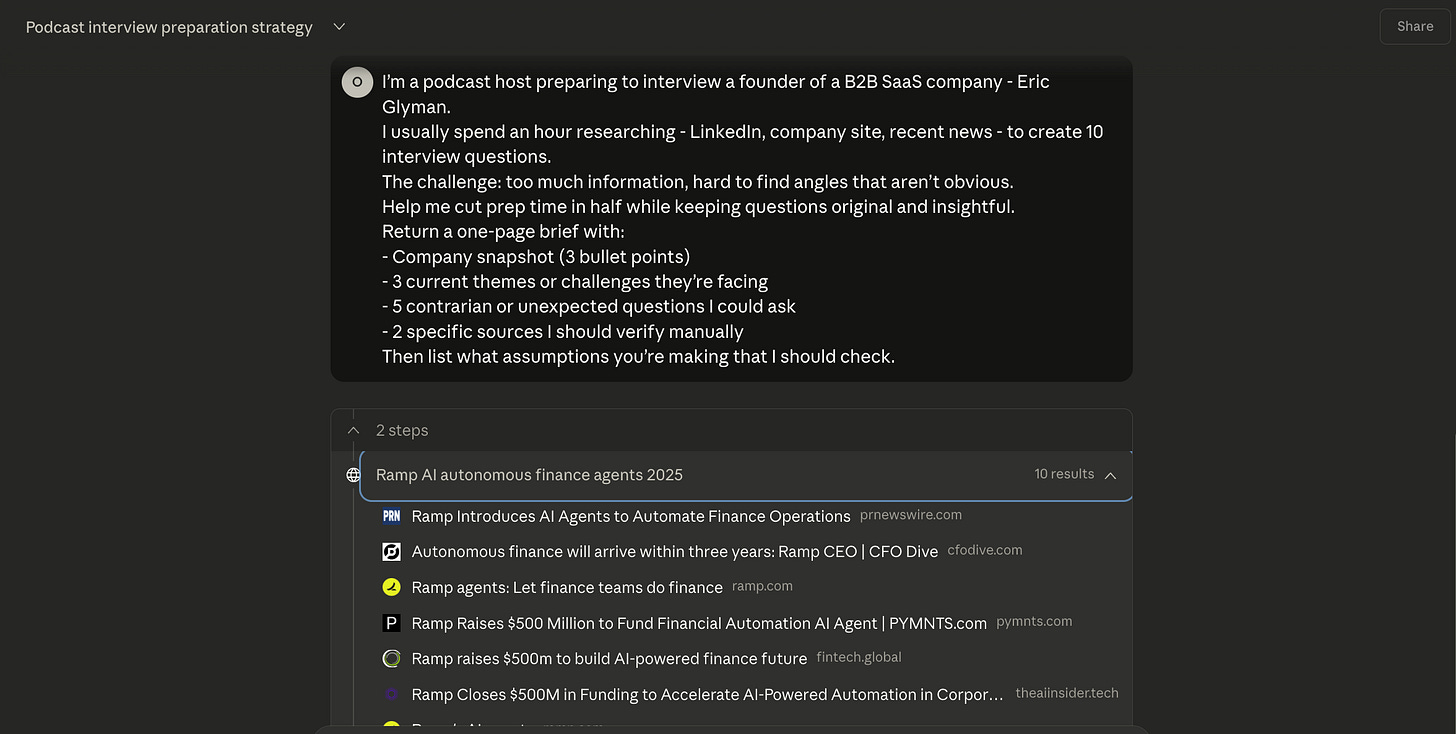

Here’s another one, from my own work, which I mentioned last week (I used Ramp founder and CEO Eric Glyman as the example in Claude).

What I get back: a structured brief with company context, intelligent questions I wouldn’t have thought of, and specific things to verify.

Interested in seeing the output? You can see the first draft of the full exec summary here.

Time saved: 30-40 minutes. And the quality’s better than what I’d have written myself, because AI pulls patterns from hundreds of similar companies I don’t have time to research.

AI hasn’t replaced me - it gave me a better starting point.

The trust test

Of course, you can’t believe everything you read. And that’s certainly true of AI. So, before using AI on anything important, test it on something you already know well.

Ask it to summarise your own brand strategy, draft your performance review process, or explain a framework you use daily.

You’ll immediately see where it gets basics right but misses nuance, where it overstates certainty, and where it suggests things that sound good but wouldn’t work in your context.

You’re building your internal ‘AI radar’ - knowing when to trust output and when to sanity-check it.

Iterate like you would with a person

It’s unlikely you’ll hit the jackpot first time around. Most people type one prompt, get a mediocre output, and then give up.

But you wouldn’t do that with a colleague. Use natural follow-ups:

“What’s missing from this?”

“What would you prioritise first?”

“List the risks or trade-offs”

“Give me a version that’s 40% shorter”

“Reframe this for an executive audience”

“What’s the contrarian view here?”

You’re having a conversation. Each response helps refine your thinking.

My first prompt gives me structure. The second tightens it. The third catches what I missed, and so on. You can do this remarkably quickly (particularly if you use voice mode).

Your plan for this week

Here’s a 30-minute plan of action:

Take your workflow from Week 1

Pick the most time-consuming or frustrating step

Use the five-part brief to describe it to ChatGPT, Claude, Copilot or whatever you have access to

Ask: “How could AI help me do this faster or better?”

Try ONE suggestion manually

Record:

Time spent before vs after

Output quality (1-5)

Confidence level (1-5)

Bonus: Try the same task tomorrow, refining your brief based on what worked.

Next week

You’ve learned to brief AI properly. Next week, we’ll tackle one of the biggest problems in modern work - too many bad meetings.

I’ll show you how AI helps you prepare better, run tighter meetings, and figure out who actually needs to be there.

Good luck!

Ollie